Imagine a grand orchestra spread across distant cities. Each musician practices their piece without ever meeting the others. No one sends their sheet music anywhere. Instead, they share only the notes they improve, the patterns they refine and the harmonies they discover. Yet when the conductor gathers all these insights, the final symphony becomes richer than anything a single musician could have created alone.

Federated generative learning mirrors this distant orchestra. It allows models to be trained across scattered datasets that never leave their origins. The knowledge travels, but the data stays home. This approach has become a quiet revolution in the way sensitive information is protected while still empowering advanced generative capabilities. Learners who explore emerging architectures in a gen AI course often encounter this technique as a foundation for responsible innovation.

The Need for Distributed Learning

In the modern data landscape, information resembles rare artifacts kept securely in private vaults. Hospitals protect patient histories, banks guard financial records and mobile devices hold personal archives. Transporting these artifacts to a central repository is risky and often prohibited.

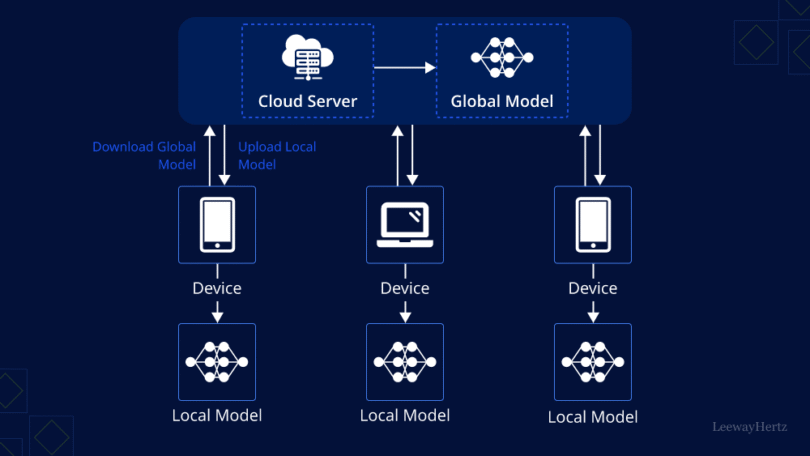

Federated generative learning was created for exactly these closed environments. Each data source trains a local model on site. Only what the model has learned, in the form of gradients or updated parameters (often encrypted or masked in transit), travels to a central aggregator. The original data never leaves its source.

This method ensures that organisations contribute intelligence without compromising trust. It also allows generative models to learn from wide ranging patterns scattered across geographies, cultures and demographics. Instead of building intelligence from a narrow sample, the model grows from a mosaic of distributed contexts.

How Collaboration Happens Without Exposure

The elegance of federated generative learning lies in the way it shares wisdom without exposing the source. When a device or institution trains its local model, it sends back only model updates, such as gradients or weight changes, rather than the records themselves. The aggregator combines these with updates from other sources, typically by averaging them, to produce a harmonised global model.
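The round trip described above can be sketched in a few lines. This is a minimal illustration rather than a production recipe: it assumes a tiny linear model standing in for a generative network, synthetic data in place of private records, and a weighted-average aggregation step in the style of federated averaging. The names `local_update`, `federated_average` and `run_rounds` are hypothetical and chosen only for this example.

```python
import numpy as np

def local_update(global_w, X, y, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one client's private data."""
    w = global_w.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w  # only parameters leave the client, never X or y

def federated_average(client_weights, client_sizes):
    """Combine client parameters, weighting each by its local sample count."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)
    return (stacked * sizes[:, None]).sum(axis=0) / sizes.sum()

def run_rounds(clients, dim, rounds=50):
    """Repeat: broadcast the global model, train locally, aggregate."""
    global_w = np.zeros(dim)
    for _ in range(rounds):
        updates = [local_update(global_w, X, y) for X, y in clients]
        global_w = federated_average(updates, [len(y) for _, y in clients])
    return global_w

# Synthetic stand-in for three private datasets (assumption for illustration)
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
clients = []
for n in (40, 60, 100):
    X = rng.normal(size=(n, 2))
    y = X @ true_w + 0.01 * rng.normal(size=n)
    clients.append((X, y))

global_w = run_rounds(clients, dim=2)
print(global_w)  # should land close to true_w
```

The aggregator in this sketch only ever handles parameter vectors. Weighting by sample count is one common choice; other weightings are possible when clients differ widely in data quality or size.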

Picture several artists sculpting pieces of clay in separate studios. They never exchange their clay, yet they share the impressions left by their tools. The master sculptor collects these impressions and shapes a universal masterpiece from them.

To strengthen privacy further, techniques like secure aggregation let the aggregator see only the combined result, so that no individual update can be inspected on its own. Differential privacy adds carefully calibrated noise, which limits how much any single record can influence what is shared and therefore how much can be inferred about it. Layered together, these protections greatly reduce what an intercepted update can reveal, although they limit leakage rather than eliminate it.
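Both ideas can be illustrated with a small sketch. It rests on several simplifying assumptions: noise is added on each client, the masks are plain floating-point vectors generated in one place, and no privacy budget is computed. Real secure aggregation protocols derive the pairwise masks from key agreement between clients and work in finite fields. The function names `clip_and_noise` and `pairwise_masks` are hypothetical.

```python
import numpy as np

def clip_and_noise(update, clip_norm, noise_std, rng):
    """Bound one client's influence, then add Gaussian noise (DP-style)."""
    norm = np.linalg.norm(update)
    clipped = update * min(1.0, clip_norm / max(norm, 1e-12))
    return clipped + rng.normal(0.0, noise_std, size=update.shape)

def pairwise_masks(num_clients, dim, rng):
    """Give every pair (i, j) a shared random mask: i adds it, j subtracts it."""
    masks = np.zeros((num_clients, dim))
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = rng.normal(size=dim)
            masks[i] += shared
            masks[j] -= shared
    return masks  # the masks cancel when summed over all clients

rng = np.random.default_rng(42)
updates = rng.normal(size=(4, 3))  # four clients, three parameters each

# Step 1: each client clips and perturbs its own update
private = np.stack(
    [clip_and_noise(u, clip_norm=1.0, noise_std=0.05, rng=rng) for u in updates]
)

# Step 2: each client hides its update behind its masks before sending
masked = private + pairwise_masks(len(private), private.shape[1], rng)

# Step 3: the aggregator sums what it receives; the masks cancel out
aggregate = masked.sum(axis=0)
```

Each row of `masked` looks like noise on its own, yet the sum matches the sum of the unmasked updates up to floating-point error. That is the property secure aggregation relies on.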

Generative Power Without Centralised Risk

Federated generative learning does more than protect privacy. It also widens what generative models can learn in settings where data cannot be centralised. Generative models, especially those used to craft text, images, audio or simulations, thrive on exposure to diverse patterns.

In traditional training, organisations may be forced to choose between safety and innovation. Federated generative learning removes that compromise. It allows the global model to absorb complex, domain specific nuances across many institutions. Financial behaviour patterns from different regions, medical imaging variations from multiple hospitals or cultural language shifts from widespread user groups all contribute to a broad generative capability.

The model can become more inclusive, fairer and more flexible, because it benefits from insights that would otherwise remain locked behind data silos. This approach aligns closely with the principles of modern responsible AI design, where creativity must coexist with strong ethical boundaries.

Real World Applications Across Sectors

Healthcare has become a leading adopter of federated generative learning. Hospitals often cannot share raw medical data due to strict legal frameworks. Yet generative models trained through federated architectures can learn to synthesise medical images, predict disease signatures and enhance diagnostic workflows without compromising patient confidentiality.

In finance, institutions use federated generative models to simulate fraud scenarios, craft synthetic transaction histories and improve risk forecasting. These simulations help organisations prepare for emerging threats while ensuring that sensitive customer information remains protected.

On personal devices, federated learning supports generative keyboards, personalised content recommendations and privacy preserving voice assistants. Every device contributes local improvements that help refine the global model, creating a powerful network of shared learning without leaking private behaviour. Professionals exploring practical frameworks through a gen AI course often find federated learning central to future production systems.

The Road Ahead

As generative systems grow in scale and influence, the need for decentralised and secure learning only intensifies. Future research is moving toward homomorphic encryption, where calculations can be performed on encrypted data, and toward adaptive federated architectures that adjust to network conditions and data diversity.
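To make "calculations on encrypted data" concrete, here is a toy sketch of an additively homomorphic scheme in the style of Paillier, used to add up quantised client updates without decrypting any of them individually. Everything about it is an assumption for illustration: the primes are far too small to be secure, the function names are hypothetical, and a real deployment would use a vetted cryptographic library. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
import math
import secrets

def keygen(p=2**31 - 1, q=2**61 - 1):
    """Toy key generation; these primes are far too small for real use."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to n + 1
    return n, (lam, mu)

def encrypt(n, m):
    """Encrypt an integer (negative values are encoded modulo n)."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m % n, n2) * pow(r, n, n2)) % n2

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)

def decrypt(n, priv, c):
    lam, mu = priv
    x = pow(c, lam, n * n)
    m = ((x - 1) // n) * mu % n
    return m - n if m > n // 2 else m  # map back to a signed integer

SCALE = 1000  # fixed-point scale for turning floats into integers
n, priv = keygen()
client_updates = [0.125, -0.5, 0.75]

ciphertexts = [encrypt(n, round(u * SCALE)) for u in client_updates]

encrypted_sum = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_sum = add_encrypted(n, encrypted_sum, c)  # no decryption here

total = decrypt(n, priv, encrypted_sum)
print(total / SCALE)  # 0.375
```

In this sketch the party doing the addition never holds the private key, so it learns nothing about individual updates. Only the key holder can read the total. Fully homomorphic schemes extend the same idea beyond addition, at a much higher computational cost.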

Another key shift lies in trust calibration. Organisations and regulators want reassurance that federated learning protects all parties involved. This has led to the development of auditable federated protocols, cryptographic guarantees and transparent monitoring layers. The objective is not just privacy preservation but also accountability in shared learning environments.

Conclusion

Federated generative learning is transforming how intelligence is built. It makes collaboration possible across locked down datasets and enables creative systems to grow without private data ever leaving its source. Like musicians performing from distant cities, each participant contributes insights that enrich the shared symphony.

As this technique matures, it will define the next generation of secure and responsible generative technologies. It represents a new way to innovate, one that keeps human trust at its core while enabling expansive learning across borders and industries.
